BiRNN+Attention

完整代码在github

此处对于注意力机制的实现参照了论文 Feed-Forward Networks with Attention Can Solve Some Long-Term Memory Problems

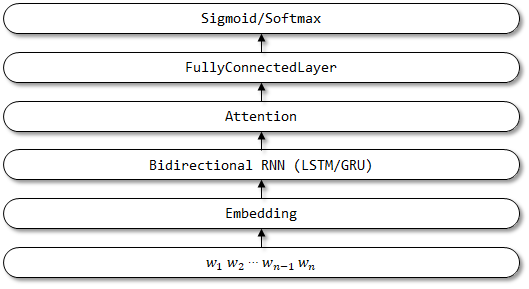

此处实现的网络结构:

基于tensorflow2.0的keras实现

自定义 Attention layer

这是tensorflow2.0推荐的写法,继承Layer,自定义Layer

需要注意的几点:

- 如果需要使用到其他Layer结构或者Sequential结构,需要在init()函数里赋值

- 在build()里面构建权重参数, 每个参数需要赋值name

- 如果参数不给name,当训练到第2个epoch时会报错:AttributeError: ‘NoneType’ object has no attribute ‘replace’

- 在call()里写计算逻辑

- 这里实现的Attention是将GRU各个step的output作为key和value,增加一个参数向量W作为query,主要是为了计算GRU各个step的output的权重,最后加权求和得到Attention的输出

1 | # -*- coding: utf-8 -*- |

自定义Model 构建

- 其中可以注意的是:允许定义Sequential来包裹常用block,比如下面的 point_wise_feed_forward_network()函数,包裹了n个全连接层。然后在自定义模型的init()里初始化使用

1 | # -*- coding: utf-8 -*- |