FM(Factorization Machines)

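Traditional LR (logistic regression) is a linear model. To capture non-linear relationships, you either extract non-linear features with GBDT or hand-craft non-linear features.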

FM explicitly constructs cross features and directly models second-order interactions:

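The formula is:

$$
y(\mathbf{x}) = w_0 + \sum_{i=1}^{n} w_i x_i + \sum_{i=1}^{n} \sum_{j=i+1}^{n} w_{ij} x_i x_j
$$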

Here $w_{ij}$ are the parameters of the second-order interactions. There are $\frac{n(n-1)}{2}$ of them, so the complexity is $O(n^2)$.

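To reduce the time complexity, matrix factorization offers an approach: replace $w_{ij}$ in the formula above with $w_{ij} = \langle \mathbf{v}_i, \mathbf{v}_j \rangle$. Then:

$$
y(\mathbf{x}) = w_0 + \sum_{i=1}^{n} w_i x_i + \sum_{i=1}^{n} \sum_{j=i+1}^{n} \langle \mathbf{v}_i, \mathbf{v}_j \rangle x_i x_j
$$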

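Here $\mathbf{v}_i$ is the latent vector of the $i$-th feature and $\langle \cdot, \cdot \rangle$ denotes the dot product. Each latent vector has length $k$ ($k \ll n$) and holds $k$ factors that describe the feature.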

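At first glance the complexity of FM is $O(kn^2)$, but algebraic simplification brings it down to $O(kn)$. The derivation is as follows:

$$
\begin{aligned}
\sum_{i=1}^{n} \sum_{j=i+1}^{n} \langle \mathbf{v}_i, \mathbf{v}_j \rangle x_i x_j
&= \frac{1}{2} \sum_{i=1}^{n} \sum_{j=1}^{n} \langle \mathbf{v}_i, \mathbf{v}_j \rangle x_i x_j - \frac{1}{2} \sum_{i=1}^{n} \langle \mathbf{v}_i, \mathbf{v}_i \rangle x_i x_i \\
&= \frac{1}{2} \left( \sum_{i=1}^{n} \sum_{j=1}^{n} \sum_{f=1}^{k} v_{i,f} v_{j,f} x_i x_j - \sum_{i=1}^{n} \sum_{f=1}^{k} v_{i,f}^2 x_i^2 \right) \\
&= \frac{1}{2} \sum_{f=1}^{k} \left( \left( \sum_{i=1}^{n} v_{i,f} x_i \right)^2 - \sum_{i=1}^{n} v_{i,f}^2 x_i^2 \right)
\end{aligned}
$$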
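As a quick sanity check of the $O(kn)$ claim, the sketch below compares a naive double loop over all feature pairs with the simplified form, in which the pairwise term equals half the sum, over the $k$ factors, of "square of the sum minus sum of the squares". The function names and the random test data are illustrative assumptions, not part of the original notes.

```python
import random


def fm_pairwise_naive(x, V):
    """Second-order FM term by looping over all feature pairs: O(k * n^2)."""
    n = len(x)
    total = 0.0
    for i in range(n):
        for j in range(i + 1, n):
            dot = sum(a * b for a, b in zip(V[i], V[j]))  # <v_i, v_j>
            total += dot * x[i] * x[j]
    return total


def fm_pairwise_fast(x, V):
    """Same term as 0.5 * sum_f [(sum_i v_if x_i)^2 - sum_i (v_if x_i)^2]: O(k * n)."""
    k = len(V[0])
    total = 0.0
    for f in range(k):
        s = 0.0      # running sum of v_if * x_i
        s_sq = 0.0   # running sum of (v_if * x_i)^2
        for xi, vi in zip(x, V):
            t = vi[f] * xi
            s += t
            s_sq += t * t
        total += s * s - s_sq
    return 0.5 * total


# Hypothetical data: n = 6 features, latent dimension k = 3.
random.seed(0)
n, k = 6, 3
x = [random.random() for _ in range(n)]
V = [[random.gauss(0, 1) for _ in range(k)] for _ in range(n)]

naive = fm_pairwise_naive(x, V)
fast = fm_pairwise_fast(x, V)
print(abs(naive - fast) < 1e-9)
```

Both functions compute the same quantity; only the fast version avoids enumerating the $\frac{n(n-1)}{2}$ pairs.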

DeepFM

Paper [IJCAI 2017]:

DeepFM: A Factorization-Machine based Neural Network for CTR Prediction

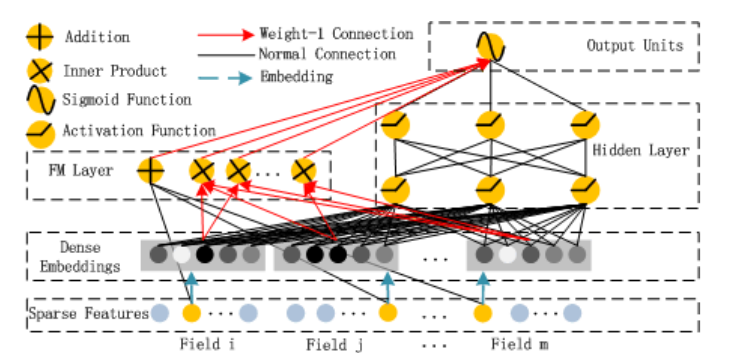

Network architecture diagram

Input: input_idxs (n, f) and input_values (n, f), where n is the number of samples and f is the number of fields.

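FM part: embed the feature ids (input_idxs) ==> (n, f, k), where k is the embedding dimension, multiply by input_values, then perform the second-order feature crossing. input_values connected to a Weight gives the first-order feature term.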

Deep part: embed the feature ids ==> (n, f, k), multiply by input_values, reshape to (n, f*k), then feed into Dense layers.
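The shapes described above can be traced with a small NumPy sketch of one forward pass. This is only an illustration under stated assumptions, not the notes' TensorFlow implementation: the vocabulary size, the hidden layer width, the random weights, and the choice to look up the first-order weight per feature id are all hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n, f, k = 4, 3, 2      # samples, fields, embedding dimension
vocab_size = 10        # hypothetical number of distinct feature ids
hidden_units = 8       # hypothetical width of the single hidden Dense layer

input_idxs = rng.integers(0, vocab_size, size=(n, f))   # (n, f) feature ids
input_values = rng.random((n, f))                       # (n, f) feature values

embedding_table = rng.normal(size=(vocab_size, k))      # latent vectors v_i
first_order_table = rng.normal(size=(vocab_size, 1))    # assumed: one weight per feature id

# Shared step: look up embeddings and scale them by the feature values.
emb = embedding_table[input_idxs] * input_values[..., None]   # (n, f, k)

# FM first-order term: weight times value, summed over fields.
first_order = (first_order_table[input_idxs][..., 0] * input_values).sum(axis=1)  # (n,)

# FM second-order term: 0.5 * ((sum of embeddings)^2 - sum of squared embeddings).
sum_then_square = emb.sum(axis=1) ** 2       # (n, k)
square_then_sum = (emb ** 2).sum(axis=1)     # (n, k)
second_order = 0.5 * (sum_then_square - square_then_sum).sum(axis=1)  # (n,)

# Deep part: flatten to (n, f*k), then Dense + ReLU, then a linear output unit.
deep_in = emb.reshape(n, f * k)
W1 = rng.normal(size=(f * k, hidden_units))
b1 = np.zeros(hidden_units)
W2 = rng.normal(size=(hidden_units, 1))
hidden = np.maximum(deep_in @ W1 + b1, 0.0)
deep_out = (hidden @ W2)[:, 0]               # (n,)

# Combine both parts and squash to a click probability.
logit = first_order + second_order + deep_out
prob = 1.0 / (1.0 + np.exp(-logit))
print(emb.shape, deep_in.shape, prob.shape)
```

In this sketch the FM part and the Deep part read the same scaled embeddings, which is why `emb` is computed only once.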

Overall network architecture

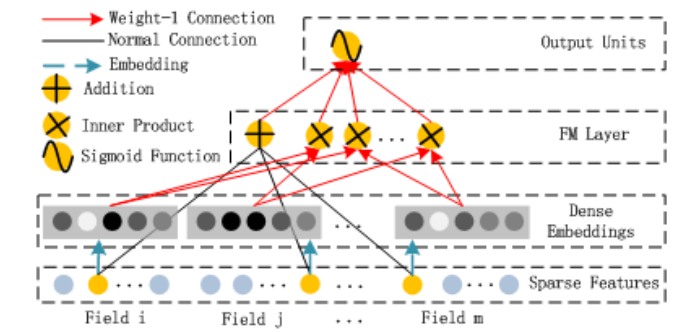

FM part:

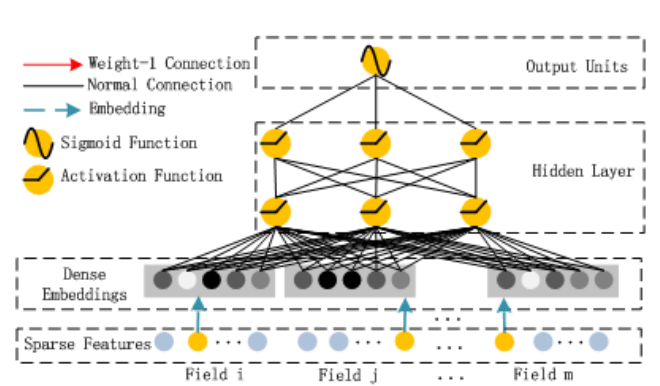

Deep part:

Code implementation (first approach)

Data processing

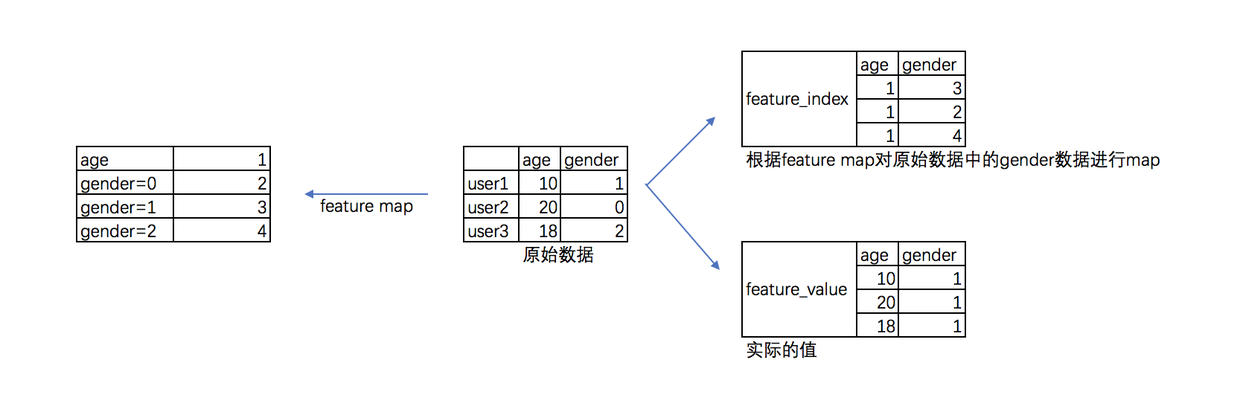

The model takes two inputs: input_idxs and input_values.

- input_idxs is the sparse encoding: every distinct value of each categorical field gets its own id, and each continuous field is encoded as a single fixed id.

- input_values holds the feature values: for categorical fields the value becomes 1, and for continuous fields the value is kept unchanged.

For example:
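One way to picture this encoding is the pure-Python sketch below. The field names (`age`, `gender`, `city`), the sample rows, and the helper functions are hypothetical, chosen only to show how the two inputs are built. Continuous fields are given their ids first here, which is an arbitrary choice.

```python
def build_feature_ids(rows, categorical_fields, continuous_fields):
    """One id per continuous field, one id per distinct (categorical field, value)."""
    feature_ids = {}
    for field in continuous_fields:
        feature_ids[(field, None)] = len(feature_ids)
    for field in categorical_fields:
        for value in sorted({row[field] for row in rows}):
            feature_ids[(field, value)] = len(feature_ids)
    return feature_ids


def encode(row, feature_ids, categorical_fields, continuous_fields):
    """Return (idxs, values) for one sample, one entry per field."""
    idxs, values = [], []
    for field in continuous_fields:
        idxs.append(feature_ids[(field, None)])   # fixed id for the whole field
        values.append(float(row[field]))          # value kept unchanged
    for field in categorical_fields:
        idxs.append(feature_ids[(field, row[field])])  # id of this specific value
        values.append(1.0)                              # categorical value becomes 1
    return idxs, values


rows = [
    {"age": 23, "gender": "F", "city": "Beijing"},
    {"age": 35, "gender": "M", "city": "Shanghai"},
    {"age": 41, "gender": "F", "city": "Shanghai"},
]
continuous_fields = ["age"]
categorical_fields = ["gender", "city"]

feature_ids = build_feature_ids(rows, categorical_fields, continuous_fields)
encoded = [encode(row, feature_ids, categorical_fields, continuous_fields) for row in rows]
input_idxs = [idxs for idxs, _ in encoded]
input_values = [values for _, values in encoded]

print(input_idxs)    # [[0, 1, 3], [0, 2, 4], [0, 1, 4]]
print(input_values)  # [[23.0, 1.0, 1.0], [35.0, 1.0, 1.0], [41.0, 1.0, 1.0]]
```

Both lists have shape (n, f), with n = 3 samples and f = 3 fields, matching the two model inputs.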

Points to note

- In second_order_part, categorical fields and continuous fields are crossed with each other.

- In deep_part, the embeddings are multiplied by the feature values and then fed into the Dense layers.

Code

    import tensorflow as tf